#9 Oh to Escape Utterly!

This week: Why Erosion Birds matter | On Gaussian splatting and NeRFs | Buried in the Rock ... plus a special reader offer for Beyond Conference 2023, a futurist playlist and some poetry.

First of all, a massive welcome to this week’s HUGE batch of new readers.

On Thursday morning “Creative R&D” was featured on Monocle’s brilliant Globalist podcast (thanks Isabella!), and I was announced as chairing a panel at this year’s Beyond Conference. Then, by the end of the day… BOOM! Our community had grown by another 10%, with new readers from the US, Canada, Singapore, Ireland, Australia and more.

THANK YOU ❤️. I hope you enjoy reading and listening.

Now, let’s get straight on to this week’s innovation 🔥🔥🔥.

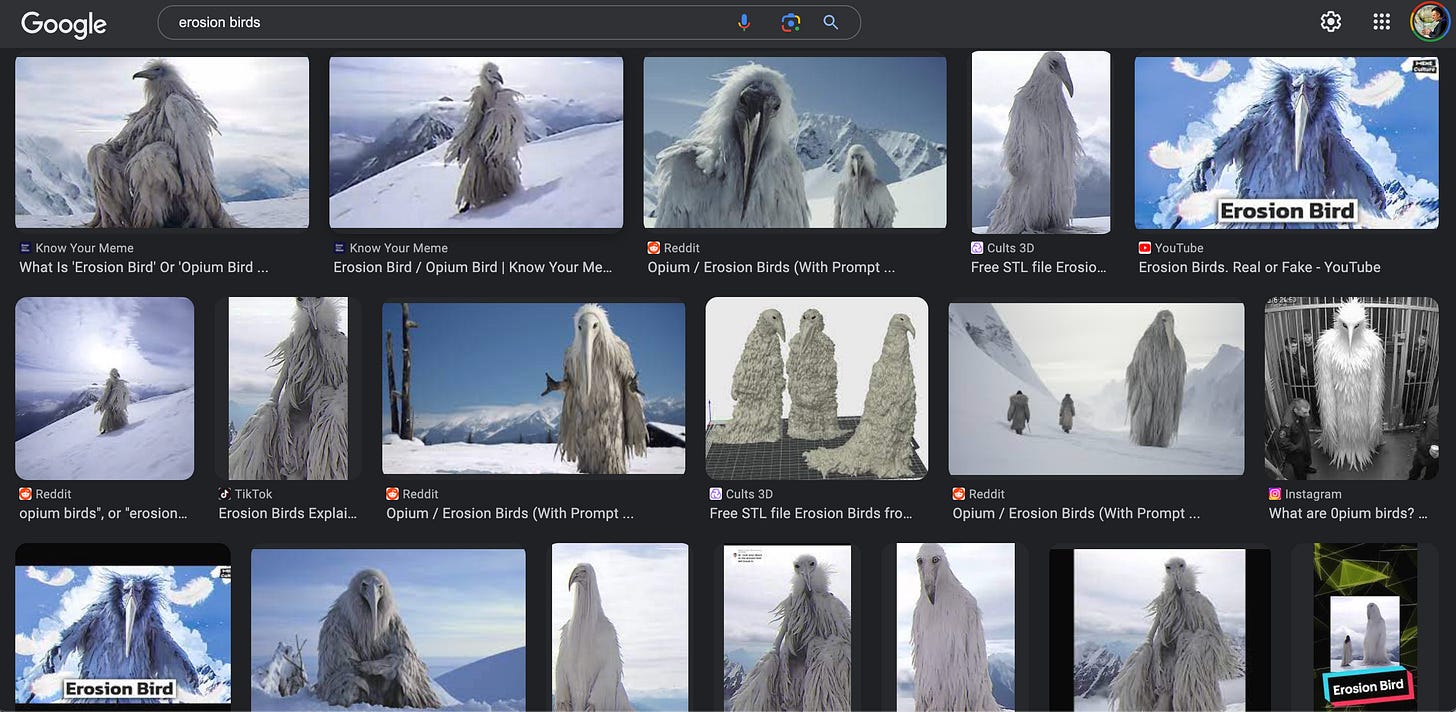

The Digital Infinite // Why Erosion Birds matter

Last week I talked about the Lumen Prize, the annual survey of the best of the best of digital art. It is a brilliant attempt to capture and reward the diversity of a disparate and polyphonic scene.

But it overlooks the critical micro-genre that right now is telling us more about the future of creativity than any other: memes.

The power of memes - as Richard Dawkins, who coined the term, first argued - lies in how they spread through adaptation.

For most of the internet’s life, the adaptation of memes has been fairly minor: endlessly changing the text over a Homer Simpson or Drake GIF.

But generative AI is unleashing a sudden Cambrian age of memetic adaptation - and Erosion Birds are its first, magnificent outcome.

Not seen Erosion Birds yet? Head over to TikTok and disappear down a very cool wormhole.

Erosion Birds (or sometimes Opium Birds) are what you see above - tall, long-beaked bird-humanoids, pictured hanging out in Antarctica… and made entirely out of generative AI. They started with this single video by @drevfx a couple of months ago and have become a phenomenon whose spread just won’t stop.

If you want to get into the detail, there’s a great piece in Hyperallergic that tells their story, and you can track the timeline of their growth on the excellent Know Your Meme.

But my take is this: Erosion Birds matter because they’re different from the memes we’ve known before.

Because, thanks to generative AI, every new meme is unique.

The spread of Erosion Birds isn’t down to text changes on top of an image, or copy-and-paste dance moves. It’s tens of thousands of people going onto Midjourney, Stable Diffusion and Runway and creating their own Erosion Birds out of the digital ether. And it’s Reddit threads teaching people how to do it, reverse-engineering the prompts you need to make your own on tools like Midjourney and Stable Diffusion:

“photograph of a thin 20 foot tall humanoid creature with a long white flowing yeti robe and a white long beak bird stork mask, long white beak, long flowing plumage, sitting on a snowy plateau, on a snow-covered Siberian mountain, grainy webcam footage, low budget special effects, practical effects, arctic, cryptozoology --ar 16:9 --v 5.2” (Shane Kai Glenn)

These weirdly stylish humanoid birds are an example of the intense, collective creative innovation that AI-driven production could unleash.

We will see much, much more of this.

Trying to make sense of what it means, I couldn’t help thinking of the pre-digital culture I grew up around - 1990s rave.

The music writer Simon Reynolds spent much of the ’90s documenting what he called the “lumpen innovation” of early jungle, hardcore, speed garage and gabba - the often deeply unfashionable, deeply drug-addled, mostly working-class and most rough-edged parts of dance music.

In Energy Flash, his book about that period, he showed how those dance scenes out-innovated more self-consciously “artistic” approaches to electronic music. The collective creative power of 1,000 DJs and 10,000 bedroom-based musicians, each making small leaps forward in pursuit of new musical styles, produced a Dionysian frenzy of newness. And it was all intensely, remarkably futuristic in style and attitude. Everywhere you looked in rave culture, tracks, artists and record labels were squarely futurist in outlook.

The same is true of today’s Erosion Birds: they are the product of rapid, bottom-up, large-scale collective creative innovation, and they are intensely futurist in attitude too.

From the start, the internet hive-mind has been claiming that the Erosion Birds memes come from the future - and are a signal of some coming cataclysm we can’t yet understand.

To me their futurist secret lies in being always new, always different, always proliferating. Their power of difference gives them an alien uncanniness I’ve not seen in meme culture before. It does feel like a glimpse of something new - a leap forward in digital creativity that makes all of the Lumen Prize winners feel slightly old-fashioned as a result.

As the Hyperallergic piece on the birds reminds us, “memes lack the authorial anchoring of other works of art.”

To me it’s this unanchored nature that gives them their power. The Lumen Prize winners are still, one way or another, making “artworks” - fixed things: made by; made for; made with. Erosion Birds are unanchored - a million makers making a million different things, each iterating and improving. Different every time.

So, listen up, the future’s squawking.

🐦🐦🐦

Special Reader Offer // Beyond Conference

There’s just a week to go now until the Beyond Conference, our partner for “Creative R&D” this November.

BEYOND is THE leading conference for creative R&D. This year they have another unique programme of talks, discussions, showcases and networking taking place 20-21 November in London.

For Creative R&D newsletter readers, tickets are available at the special rate of £299 - that's a discount of £100! Find out more and book here.

Neo-Digitisation // Gaussian Splatting and NeRFs

Next up, let’s talk tech.

When I started this newsletter I meant to write about projects, people, companies and technologies. But there have been so many 🔥🔥🔥 projects to talk about that I’ve got distracted.

So let’s fix that.

This week let’s delve into a fast-emerging technology - another AI-driven acceleration, inevitably - that offers a new way to make 3D digital objects and environments out of 2D images.

Whilst Gaussian splatting and NeRFs (neural radiance fields) might sound like two early-’80s New York punk bands, they are both ways of building 3D models from a surprisingly small amount of visual data: typically a set of ordinary photos or video frames and, in some newer variants, as little as a single image.

I won’t get too nerdy on the technical details - there’s a good summary here and a great presentation here.

The mechanisms for both are evolving fast. Although both have roots stretching back over the last two decades, NeRF in its current form has been around since 2020, while Gaussian splatting in its current form was first described in this paper only in August this year.
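For the technically curious: whatever their differences, both techniques draw a picture the same way, by blending many semi-transparent coloured samples along each line of sight, nearest first. NeRF samples points along a ray; Gaussian splatting sorts its 3D blobs by depth. Here is a minimal Python sketch of just that blending step, under my own simplifying assumptions: the function names are hypothetical, colours are plain RGB tuples, and it skips the genuinely hard part, which is learning those colours, opacities and (for splatting) the Gaussians' positions and shapes from photos.

```python
import math


def density_to_alpha(density, step):
    """NeRF-style opacity for one ray segment: alpha = 1 - exp(-density * step)."""
    return 1.0 - math.exp(-density * step)


def composite_front_to_back(samples):
    """Blend (rgb, alpha) samples, ordered nearest-first, into one pixel colour.

    Each sample contributes rgb * alpha * T, where T (transmittance) is the
    fraction of light that got past every nearer sample. Returns the pixel
    colour and the leftover transmittance (how much background shows through).
    """
    colour = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for rgb, alpha in samples:
        weight = transmittance * alpha
        for k in range(3):
            colour[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # nearly opaque already: nothing behind is visible
            break
    return tuple(colour), transmittance


if __name__ == "__main__":
    # Hypothetical scene: a half-transparent red blob in front of an opaque blue one.
    pixel, leftover = composite_front_to_back([((1.0, 0.0, 0.0), 0.5),
                                               ((0.0, 0.0, 1.0), 1.0)])
    print(pixel, leftover)  # (0.5, 0.0, 0.5) 0.0
```

Feeding in alphas from `density_to_alpha` gives roughly NeRF's discrete volume-rendering sum; feeding in depth-sorted Gaussians' projected opacities gives roughly splatting's blend. As I understand it, the big speed difference comes mostly from how each method produces those inputs (a neural network queried at many points versus a sorted list of explicit blobs), not from this loop.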

But their potential is huge, and we should laser in quickly on the opportunities they present for museums and other collection-holding organisations.

For much of the last decade, museum digital collections have seemed on the brink of expanding into 3D. Sometimes that was through high-end LIDAR scanning, more often through photogrammetry - and sometimes it was through well-funded research. But mostly it’s been through bottom-up opportunistic innovation.

One of my favourite projects at the British Museum was building what is still just about the most comprehensive 3D museum collection on the 3D platform Sketchfab - a collection that is entirely down to the persistence of Dan Pett (now head of digital at Historic England) and Thomas Flynn (who went on to work for Sketchfab). They just loved making this stuff, and in Sketchfab founder Alban Denoyel they found a huge and helpful supporter.

Back in 2015 I was interviewed by Wired about the potential of photogrammetry and the democratised access Sketchfab offered - and was breathlessly excited (I usually am, sorry), declaring that:

“This is one of those moments when technology changes what a museum can be.”

But after nearly a decade of trying, we are barely any closer to having large-scale 3D digitised collections than we were before.

Whilst photogrammetry isn’t hard, it’s hard enough, and it produces data of a size and complexity that has kept it out of the “textual metadata plus photo” processes that underpin collections digitisation.

NeRF and Gaussian splatting might remove that production barrier to entry, and enable the systematic production of 3D digital collections.

Working from a single image or a couple of images, AI extrapolates and interpolates to turn two dimensions into three. The technology is far from perfect and, like all early-stage generative AI, it both makes errors and bloats data into oversized files, but its potential is rich:

They could take museum collections with just one or two photos per object and semi-automatically create 3D models AT SCALE.

That is potentially MASSIVE territory people should start exploring, fast.

I was talking to Rupert Harris, CEO of Preview Tools, about this earlier this week. He set my mouth watering with this simple demo showing how single images can become 3D objects.

For all the museum people reading this - think about the possibilities of this. It could be how we build a bridge between collections databases and things like the advanced digital twins I spoke about in issue 3.

This might be how we really unlock a new generation of creativity from museum and heritage digitisation.

So be excited. As a wise man once said, “this is one of those moments when technology changes what a museum can be.” 😂❤️🔥

Loving your weekly dose of “Creative R&D”? Please consider upgrading to a paid subscription - the more time I have to write this, the better it will be!

Project of the week // Buried in the Rock

This is a GREAT project to flag in a week when we’re talking about the creative potential of 3D scans.

“Buried in the Rock” is a VR film following cavers Tim and Pam Fogg down into Northern Ireland’s Tullyard Cave System. Using LIDAR scanning to “make darkness visible” rather than filming deep down in the caves, it looks 🔥🔥🔥🔥

Made by the outstanding Scanlabs, and funded by StoryFutures, this is part of a rich current wave of immersive documentary storytelling.

Track it down in the StoryFutures Xperience network, or at VR fests worldwide.

Bonus feature 1 // Know your poetry!

Read this far? Then you get to find out that this week’s title came from Walt Whitman’s “One Hour to Madness and Joy”:

O to escape utterly from others’ anchors and holds! To drive free! to love free! to dash reckless and dangerous! To court destruction with taunts—with invitations! To ascend—to leap to the heavens of the love indicated to me! To rise thither with my inebriate Soul! To be lost, if it must be so!

I ❤️❤️❤️❤️ Whitman.

Oh, and there was a dash of Milton in the piece about “Buried in the Rock” too.

Bonus feature 2 // Futurist playlist

Writing this newsletter clearly got Spotify thinking.

Opening the app this morning I was presented with this “Futuristic Playlist”, made just for me. Setting aside for a second the data ethics of how it “knew” I’d been writing about this, it’s GOOD.

Enjoy.

See you next week. ❤️🐦🔥

Really interesting issue Chris—esp about the Gaussian Splattering 3D rendering vs photogrammetry. I remember well using the sketchfab images from the British Museum as well as those Boulevard Arts captured with our LiDAR Scanner. We ported those images to create some amazing VR experiences and even won a Microsoft HoloHack for the app we built for HoloLens v1. Museums have so much to be excited about in how they can share their collections and stories—as well as improve their collection management and conservation practices and policies.