#31: The R&D Lab of Digital Aesthetics

This week: Aesthetics.Fandom.Wiki - the R&D lab of digital aesthetics // How an AI-powered movie camera might save film-making // A Chemical Brothers immersive experience, BridgeAI and much more ...

Time to get going with issues 31-40 of the newsletter after a week’s gap for a family event.

This week I’m looking at another one of those random discoveries that’s changed my understanding of the aesthetics of the internet - a hidden back corner that indexes digital style.

Then we’re off into the murk of the ecosystem with your must reads, watches and do’s.

Before we wrap up with a look at a prototype AI film-camera.

TEAM, LET’S DO THIS!

Art: Aesthetics.Fandom.Wiki - A Community R&D Lab

When I wrote issue 24, No-Clipping Out of Reality, I spent a long time trying to break my own filter bubbles and find my way into the weirder niches of the internet.

It takes more effort than you think.

The algorithms are trying their best to pull you back to the safe ground that years of data surveillance have taught them you live in.

But I don’t want to stay there. I want to find out where the new is being shaped.

So stumbling onto Aesthetics.Fandom.Wiki (I’ll call it “AFW” from now on) was a brilliant moment of revelation.

Buried in the back of a news site covering different parts of fandom is a Wiki where the world is chronicling the different features of online aesthetics - naming them, describing their features and how they interconnect across fashion, books, comics, films, TV shows, games, music, social media and more.

Reader, it is rich pickings.

From 2000s Virtual Singer to Auroracore, Dazecore to Frutiger Aero and on, it’s a brilliant resource for seeing how the apparently random messiness of so much online content is interconnected across creative disciplines, spaces and times.

It has helped me understand things I never would have made sense of otherwise.

To give a wild example: whilst I’m only a TikTok lurker at best, whenever I dip in I get these odd live-streams of crazed drunk Russian dudes doing karaoke.

I have no cultural reference point for this, honestly. All it reminds me of are the brilliant skits centred around Shaun Ryder and the Happy Mondays in Michael Winterbottom’s phenomenal film 24 Hour Party People.

But this clearly isn’t where these guys come from AT ALL.

But on AFW I found the references, and instantly recognised them as an echo of the Gopniks,

a subculture rooted in the working-class neighborhoods and suburban areas of Russia, Ukraine, Belarus, and other post-Soviet countries. The fashion style associated with it began to emerge within lower-income citizens during the 90s, after the fall of the Soviet Union, and respectively decreased in popularity in the 2000s.

It’s a pretty niche example, but this is all about the outsize effects of aesthetic niches - the way they codify digital creativity in ways that might otherwise be close to invisible.

Dipping into the definitions here has helped me see things I’d never made sense of before in totally new ways.

I’m not the first to notice AFW.

Both the Atlantic and Vox have covered it. When Terry Nguyen mentioned it in a wider piece on trends in 2022, the take was bluntly critical:

While aesthetic components were once integral to the formation of traditional subcultures, they’ve lost all meaning in this algorithmically driven visual landscape. Instead, subcultural images and attitudes become grouped under a ubiquitous, indefinable label of a “viral trend” — something that can be demystified, mimicked, sold, and bought.

It’s the obvious read - the one always thrown at digital media as an offshoot of high-speed hyper-capitalism.

But wherever this criticism crops up, I want to stand up for the authenticity of bottom-up creative communities.

The culture being documented on AFW is largely pre-commoditisation. It’s a petri dish of styles, some half-formed or barely formed, some that have gone on to coalesce and become big business.

The half-formedness is a warning and a recommendation - don’t expect everything you find on AFW to make total sense.

But when it does come together, AFW is THE place to go looking for the radical creative styles emerging from the internet, and a critical resource.

It’s nothing less than a Community R&D Lab for the future of digital creativity.

Dive in.

Ideas! // Stories from the digital edge

First up, a report I’ve written for the Heart of London Business Alliance (the West End’s Business Improvement District) on the opportunity to bring a new generation of CreaTech businesses into one of the most creative places in the world. If you prefer blogs to reports, here’s a take on it I did for the Creative Economy Newsletter and old partners the Beyond Conference. Can’t read a blog or a report? The video of the launch event is coming soon!

Next up, an AWESOME funding opportunity from the Innovate UK BridgeAI programme at Digital Catapult. This is a 14-week accelerator programme for UK-based startups, scaleups and SMEs to help validate and develop responsible, ethical and desirable AI and ML deep-tech solutions, with a specific focus on the UK's creative sector. The partners are top tier - Ladbible, Bauer Media, Merlin Entertainments and more. There’s a briefing on 3rd July and you can find out all about it here.

Diane Drubay’s brilliant WAC Lab programme was our partner for a few months this year. The video below is their demo day and shows the rapid-fire iterative but DEEP thinking about the future of museums this amazing accelerator produces. Exciting stuff.

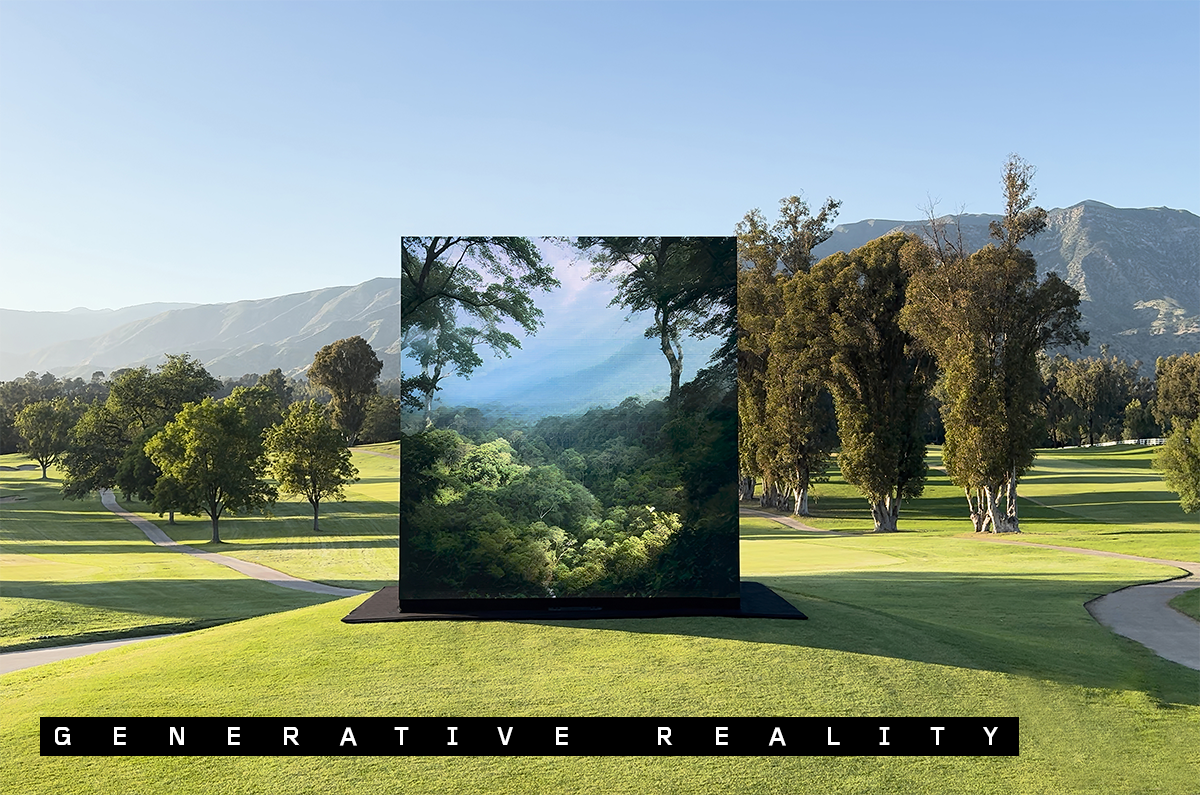

If you’ve not signed up for email updates from Refik Anadol Studio, you should. Apart from making you jealous at all the super-cool stuff the undoubted king of AI art is up to, this month’s edition also features - tucked away at the end - his naming of the next phase of his art: Generative Reality.

Remember where we’re up to here. Refik’s just made the switch from AI art based on GANs, trained on relatively small data sets, to his own LLM. Supported by Google and NVIDIA, this is him going big. You can read about this in my piece for the Art Newspaper last month. Generative Reality is the concept underpinning all this:

We envision Generative Reality as a complete fusion of technology and art, where artificial intelligence is used to create immersive environments that integrate real-world elements with digital data. These environments feature real-time rendering that merges visual, scent, and other sensory inputs to form fully interactive 3-D worlds, creating a reality that is both shaped by and responsive to AI-human interactions. This concept emphasizes transforming vast data sets into perceptible phenomena and expanding the boundaries of both art and human experience.

It’s a big idea to throw away at the bottom of an email. Really big. And it may prove to be the first major artistic statement about art in the age of advanced technologies.

More immersive experiences should be based on music. At least one limb of the medium has its roots deep in 90s club culture and the graphics on the walls of The End, Bagleys or Turnmills. So I love the look of this immersive experience in Barcelona scored by The Chemical Brothers. When they were just DJs I used to go see the Chemicals at London’s Turnmills most weekends from 1994 to 1996. It was my defining club night of the stretch between 16 and 18 when I shouldn’t really have been out at all. Full of wild and wonderful nights out. I never much listened to them as a band, but by random chance ended up in one of their music videos for about three seconds a couple of years later. Yes, reader, the natty orange Diesel tracksuit top and gaunt, ghostly cheekbones in the background between 2’45” and 3’00” belong to none other than your humble scribe.

It’s a few years old now, but this interview with indie horror games maker Yames is a great insight into the creative process of one of the more disturbing creative minds out there in digital land. But also read it for what it is - an interview with an artist. If content like this were more widely read and seen, any notion that games aren’t an artform would soon disappear.

Technology! // Kino-A-EYE

The real problem with Generative AI and video is how it compresses the creative process to unleash AI’s power of simulated creativity.

Text-to-video models like Sora skip all the fun between script and storyboard, going straight from word to moving image.

It’s potentially a dream for tomorrow’s Hitchcocks, Kubricks and Nolans - allowing the megalomaniac controller of images to rebuild and refine without any outward interference. Back in Issue 30 I talked about how this might unlock the novelist or poet of film - a kind of personal, inward film-making drawing on AI’s image-making capability to produce something wildly new and exciting.

But the novelist film-maker might also be very prone to the kind of coldness that all three of those great film-makers, Hitch, Kubrick and Nolan, have often been accused of: caring more about form and image-making than about uncovering human feeling.

The alternative to that kind of control is exploratory, sometimes freeform camera movement. It happens not in planning but in the act of creation.

It’s a parallel cinematic tradition - from Dziga Vertov’s “Man with a Movie Camera” through Jean Renoir’s “La Règle du jeu”. In Michelangelo Antonioni’s “The Passenger” and Robert Altman’s “Nashville”. In Paul Thomas Anderson’s “Magnolia” and Gaspar Noé’s “Climax”.

Take the famous extended shot near the end of “The Passenger.” If you’ve not seen it, it’s one of the great singular movies. Jack Nicholson plays a journalist who switches identity with a dead man and inhabits his life, and ends the film killed by gangsters in a lonely hotel room. It’s a deeply odd film - so quiet and brooding it’s virtually silent. Jack has never said so little.

The penultimate shot is a masterpiece - the camera taking on a life of its own to leave the room where Nicholson will die, going off on an exploration of time and space around the hotel grounds.

Antonioni described the camera as his pen and this is its most vivid demonstration.

I really worry that this kind of film-making - the most powerful, most meaningful kind - might dissipate in the face of AI.

But maybe I’m wrong.

I’m really excited by the possibilities of putting AI not at the service of text-to-video but as a transformative partner IN THE CAMERA, as this prototype from SpecialGuestX and 1stAveMachine does.

This strikes me as a much more potent way of unlocking AI’s power - letting the free exploration of the camera meet the generative potential of AI head on to really unlock scene making.

It’s very early, but this is an area to keep an eye on - a new device for a new way of seeing.